I know…

The ‘We can’t even do cloud properly’ argument isn’t going to win any awards.

You might want to keep that card in your hand anyway.

Because while many organizations are still struggling to fully leverage the cloud—an established technology—boards and shareholders are banging the AI drum as if it’s a silver bullet.

If you’re a senior manager, CEO, or product leader, you’re probably stuck between the fear of missing out (FOMO) and the pressure to deliver impossible AI-driven wonders. Before you cave to the hype, let’s cut through the noise.

The Pressure Is Real—and Not Always Rational

Decisions are rarely made in a vacuum.

If your competitor invests in AI and gains an edge in cost savings or customer engagement, you’re forced to follow suit—or risk falling behind.

It’s classic game theory: you might not love the move, but letting someone else get a head start feels worse.
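To make that logic concrete, here is a minimal Python sketch of a two-firm “invest or wait” game. The payoff numbers are purely hypothetical, chosen only to show the structure, and the names `PAYOFF`, `best_response`, and `dominant_strategy` are made up for this illustration. Under these assumed payoffs, investing is the best move no matter what the rival does, yet both firms end up worse off than if neither had rushed in.

```python
from typing import Optional

# Hypothetical payoffs for illustration only (not data from any study).
# PAYOFF[(my_move, rival_move)] is MY payoff; the game is symmetric,
# so the rival's payoff is PAYOFF[(rival_move, my_move)].
PAYOFF = {
    ("wait", "wait"): 3,      # both hold back: stable, decent outcome
    ("invest", "wait"): 4,    # I move first and gain an edge
    ("wait", "invest"): 1,    # rival moves first: I fall behind
    ("invest", "invest"): 2,  # both rush in: costly arms race
}
MOVES = ("invest", "wait")


def best_response(rival_move: str) -> str:
    """Return the move that maximizes my payoff, given the rival's move."""
    return max(MOVES, key=lambda my_move: PAYOFF[(my_move, rival_move)])


def dominant_strategy() -> Optional[str]:
    """Return the move that is my best response to every rival move, if any."""
    responses = {best_response(rival_move) for rival_move in MOVES}
    return responses.pop() if len(responses) == 1 else None


if __name__ == "__main__":
    for rival_move in MOVES:
        print(f"Rival chooses {rival_move!r} -> my best response: {best_response(rival_move)!r}")
    move = dominant_strategy()
    print("Dominant strategy:", move)
    # Both firms following the dominant strategy end up worse off than mutual waiting.
    print("Both invest:", PAYOFF[(move, move)], "vs. both wait:", PAYOFF[("wait", "wait")])
```

Swap in your own estimates for the four payoffs: if investing stops being the best response to a rival who waits, `dominant_strategy()` returns `None`, and following suit is no longer automatic.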

The actions of Apple and Google in the last few months were game theory unfolding in real time. Apple spent months building anticipation for its grand “Apple Intelligence.” The stock earned the highest praise before the AI update was even released:

Apple tallied yet another all-time high share price Monday after a pair of investment firms meaningfully hiked their price targets for the stock, the latest positive push for Apple stock ahead of the hotly anticipated release of generative artificial intelligence iPhones.— Forbes

The release was meant to reassure both investors and loyal fans that the company wasn’t lagging behind OpenAI or Microsoft.

After the release, Apple faced backlash for producing false news summaries, such as incorrectly stating that a murder suspect had taken his own life. Critics called Apple Intelligence “magically mediocre,” and Elon Musk voiced ethical concerns.

You might also recall the shaky debut of the AI-led Google Search Assistant upgrades, rushed out for fear that nimbler rivals like Perplexity were eating Google’s breakfast, lunch, and dinner.

Critics accused Google of delivering dangerously inaccurate results, such as suggesting glue as a pizza ingredient, recommending eating rocks for nutrition, and other irresponsible AI responses.

Both Apple and Google found themselves in a bind, propelled by the fear of losing to their competitors in the AI arms race.

Both are real-world examples of game theory. It’s not that they fully believed in their new products. It’s that if there was even a small chance a competitor’s move would give that competitor an unassailable lead, they felt compelled to act, no matter how messy or unfinished the offering might be.

Of course, the subpar AI products backfired.

Must-Haves for a Successful Tech Revolution

I have covered this topic many times now. For example,

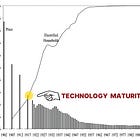

Many people imagine that today’s AI can do what AGI promises. They assume AI is logical, can solve complex problems, and can adapt seamlessly across contexts. History shows which elements an invention needs to succeed:

Tech maturity matters. Then Infrastructure → Platforms → Applications need to happen in exactly that sequence.

Success example: the telephone. Bell’s breakthrough (1870s) let people talk via cables, and the initial rollout relied on the existing telegraph network. Then automated exchanges were invented, making telephones practical in homes and businesses.

Failure examples, in case Apple Intelligence and the Google Search Assistant weren’t enough:

Electric Cars (1900s): Inefficient battery technology + no charging network → limited adoption for over a century.

Google Glass (2013): AR without viable platforms → consumer rejection → limited adoption until Meta + Ray-Ban.

Which category does AI fall into entering 2025?

Subscribe to 2nd Order Thinkers to listen to this post and get 7 days of free access to the full post archives.