What do you think creativity is?

I see creating as a process, and I've discussed it here. You start by generating an idea, then execute it, refine it, and finally deliver it.

Since 2022, how many of us have already relied on AI in part of our creation process, especially to generate ideas or to brainstorm?

The media, academics, the people I spoke with, and I myself all thought that one of the best uses of AI is brainstorming. Some quick stats:

90% of creators also said that they believe generative AI tools can help create new ideas. Source: Adobe

In our study, 100% of participants found AI helpful for brainstorming. Only 16% of students preferred to brainstorm without AI. Source: sc.edu/

GPT-4 ranked in the top percentile for originality and fluency on the Torrance Tests of Creative Thinking (the most widely used and validated divergent-thinking tests). Source: University of Montana

What if I told you that the truth might actually be the opposite?

I want to bring three of the latest studies to your attention today that will answer the following questions:

Can LLMs really help us think outside the box? Or do they silently put our imaginations in a box?

If AI is only a comfort zone enhancer, what does it mean for those of us who have relied on AI for brainstorming over the years?

Is there anything we can do… to escape this imagination trap?

Shall we?

Originality and Diversity Are Not the Same Thing

Let’s clarify some terms before we begin.

This matters both for understanding what these researchers actually mean and for me to explain their studies to you properly.

Originality is like that one extraordinary dish a chef discovers, let’s say, a fusion of unexpected ingredients that no other restaurant offers. It might be a Korean-Mexican-Turkish fusion taco with lamb kebab topped with house-made kimchi that nobody else has thought to combine.

This dish might stand out because it's statistically rare compared to burgers or pasta.

BUT, keep in mind, originality ≠ high quality.

Diversity is more like a restaurant that doesn't need any single revolutionary dish but rather a breadth of options with a menu that caters to everyone. You might see Swedish meatballs, Southern fried chicken, and Chinese fried rice as the main course…

You can be individually original (each answer is “uncommon”) and collectively repetitive (all your answers circle the same “novel” concept), or unoriginal while collectively diverse.
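If it helps to see the distinction in code, here is a minimal sketch. The two scoring functions, the dish names, and the counts are illustrative assumptions made up for this example; they are not the metrics used in any of the studies discussed below.

```python
from collections import Counter

def originality(concept, population):
    """Rarity of one concept relative to a reference population (1.0 = never seen)."""
    counts = Counter(population)
    return 1.0 - counts[concept] / len(population)

def diversity(concepts):
    """Share of distinct concepts within a single set of answers."""
    return len(set(concepts)) / len(concepts)

# Hypothetical population: what 100 restaurants serve as their main dish
population = (
    ["meatballs"] * 30
    + ["fried chicken"] * 35
    + ["fried rice"] * 30
    + ["kimchi kebab taco"] * 5
)

# Menu A: three takes on the same rare concept
menu_a = ["kimchi kebab taco", "kimchi kebab taco", "kimchi kebab taco"]
# Menu B: three common but different dishes
menu_b = ["meatballs", "fried chicken", "fried rice"]

for name, menu in [("A", menu_a), ("B", menu_b)]:
    avg_originality = sum(originality(d, population) for d in menu) / len(menu)
    print(name, round(avg_originality, 2), round(diversity(menu), 2))
```

Menu A scores high on originality and low on diversity; Menu B is the reverse. Same restaurant scene, two very different questions.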

Is It Proven That GenAI Writing Lacks Diversity?

You can skip this one if you read my analysis two weeks ago.

But my focus in this article is different. Rather than asking whether AI impacts how we think, I want to look at the claim that AI-generated content was far less diverse than content created solely by the human brain.

If you haven’t read the Your Brain on ChatGPT study and you don’t think you’d have the time (or patience) for the 204-page research, read or listen to my 20-minute summary. The PDF of this study, with my highlights and handwritten notes, is available. Just reach out, reply, or message me, and I will send you a copy.

They used several NLP techniques to compare essays:

n-gram overlap (how much the wording is repeated),

Named Entity Recognition (e.g., number of unique names, places, etc.), and

“topic ontology” (basically, how different the essay topics were, structurally).

The researchers did find that LLM-generated essays were, on the surface, more similar to each other, sharing more of the same entities, word patterns, and structures.

So, Was Diversity Lower With LLMs?

In a way, yes.

This MIT study found that people using ChatGPT tended to generate essays that were more similar to each other, at least in the n-gram and named-entity analyses the researchers ran. The summary table even says the “distance” between LLM essays was “not significant” at times, especially when participants just copy-pasted and barely edited.

BUT!

The effect wasn’t always dramatic, and there’s a lot of nuance.

Their measure focused on the form, not the substance.

Meaning, you could write 10 essays about happiness using totally different phrasing but all arguing “helping others is good”, and their n-gram/NER analysis might say you’re “diverse,” but you’re actually repeating the same concept.

Or vice versa: essays might use the same few words but come to totally different conclusions.

In plain English, you can think of this as judging a restaurant’s menu by how many different spices they use instead of the flavors that actually hit your tongue.
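To make the "form, not substance" point concrete, here is a small sketch of a word-bigram overlap score. It is a simplified stand-in for the n-gram analysis mentioned earlier, not the researchers' actual pipeline, and the example sentences are invented for illustration.

```python
def bigrams(text):
    """Set of adjacent word pairs in a lowercased text."""
    words = text.lower().split()
    return set(zip(words, words[1:]))

def overlap(text_a, text_b):
    """Jaccard overlap of word bigrams: 0.0 = no shared wording, 1.0 = identical."""
    a, b = bigrams(text_a), bigrams(text_b)
    union = a | b
    return len(a & b) / len(union) if union else 0.0

# Same idea, different wording: the metric sees two "diverse" texts
same_idea = overlap(
    "helping other people is what makes life feel meaningful",
    "we find real joy when we support those around us",
)

# Similar wording, opposite conclusions: the metric sees two "similar" texts
opposite_idea = overlap(
    "money can buy happiness if you spend it well",
    "money can not buy happiness if you spend it well",
)

print(same_idea, opposite_idea)
```

The first pair makes the same argument yet shares no bigrams at all, while the second pair disagrees and still shares most of its wording. A surface metric like this cannot tell you which pair is conceptually closer.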

Did They Measure “Originality”?

They do talk about “homogeneity” and similarity of content, but originality… as in, “is this idea rare or novel?” wasn’t really the metric. It’s all about how different the essays are from each other, not from the broader population.

Only one sentence mentioned it… and with some uncertainty:

This could translate behaviorally into writing that is adequate (since most of them did recall their essays as per the interviews) but potentially lacking in originality or critical depth.

Overall, this isn’t enough to prove that the LLM-generated essays were less diverse in ideas or creative thinking.

Let’s see what other studies have to say.

Is ChatGPT More Creative Than One Human?

A landmark study: An empirical investigation of the impact of ChatGPT on creativity in Nature Human Behaviour (Lee & Chung, 2024)

Don’t worry; this experiment was refreshingly more straightforward than the MIT one.

They wanted to find out if ChatGPT is more creative than humans. So they designed six different challenges. Here are three examples:

Repurposing a tennis racket and garden hose (Study 2a)

Creating a toy using a brick and fan (Study 2b)

Repurposing a flashlight and hair spray (Study 5)

Participants (between 100 and 200 people) took part across the six creativity challenges.

Similar to the Your Brain on ChatGPT study, the researchers also split the participants into three groups:

One worked solo with no assistance,

One used a web search (Google) for inspiration,

And one got to consult ChatGPT (GPT-3.5) while brainstorming.

In each challenge, a participant would present one idea as their final answer. Independent evaluators would then assess how creative each idea was, usually by rating how original and useful they found it.

Did they come to a similar conclusion?

They found that originality scores were significantly higher in the AI-assisted condition compared to those who only used a search engine or their brains alone.

Other key findings:

ChatGPT enhances individual creativity compared to web search and unaided brainstorming.

Ideas generated with ChatGPT assistance scored higher on originality and appropriateness scales than ideas by humans or via a search engine.

ChatGPT particularly excelled at incrementally creative ideas rather than radically novel ones.

Contrary to common belief, post-generation human edits did not further increase creativity beyond raw ChatGPT output.

BUT!

The original study's design had a fundamental flaw: it treated each participant's idea as an independent unit, without considering how the ideas relate to one another.

What happens when multiple people use the AI tool simultaneously? Will the participants come up with a similar or diverse range of ideas?

So instead of asking, "Does ChatGPT make individual ideas more creative?" the next group of researchers, Meincke et al., decided to use the exact same experiment but asked: "Does ChatGPT make groups of ideas more diverse?"

Why not?

We all love a great showdown, researchers pulling each other’s hair.

Is ChatGPT More Creative Than A Group of Humans?

This study: ChatGPT Decreases Idea Diversity in Brainstorming, from the Wharton School at UPenn, 2025.

They started by questioning the definition of originality.

Originality, however, is not just a property of an individual idea…

but also capture how similar or unique the ideas are relative to one another.

Do you still remember this task from the last study?

Repurpose unused household items: an old tennis racket and a garden hose.

Here's an example to show you the difference between only looking at the individual answers vs. evaluating ideas collectively.

Now, one set of ideas is to reuse hoses as: “Lawn sprinkler,” “Water sprinkler,” “Garden sprinkler.”

Whereas another set of human-generated ideas looked like this: “Garden furniture,” “Storage rack,” “Picture mount.”

Hmm. Do you see the theme?

For the first set of ideas, every idea, when evaluated on its own, may be original; however, if you evaluate across that set, it's not difficult to see that they are the exact same concept: sprinklers.

For the second set, each idea is pretty novel on its own, and importantly, each is conceptually distinct from the others.

The first set is exactly what the researchers observed in the ChatGPT-assisted brainstorms.

Individually, people’s AI-powered ideas were often judged quite creative. But when you looked at the pool of all ideas coming from AI users, a lot of them clustered around the same concepts. In the tennis racket + hose challenge, “sprinkler” ideas were everywhere.

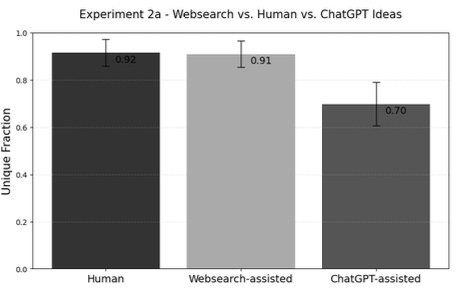

In Study 2A (repurposing a tennis racket and garden hose), 20 out of 96 ChatGPT-assisted ideas included the word "sprinkler," compared to only 7 in the web search group and 12 in the brain-only group.

This represents approximately 21% of all ChatGPT responses focusing on the same basic concept. Hence, you can see the unique fraction in the screenshot below is lower.
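Here is a rough sketch of how a "unique fraction" could be computed. The source above does not spell out the researchers' exact procedure, so treat this as an assumption-heavy illustration: it uses simple word overlap and a made-up threshold of 0.3 to decide when two ideas count as the same concept.

```python
def token_jaccard(a, b):
    """Word-level Jaccard overlap between two short idea labels."""
    ta, tb = set(a.lower().split()), set(b.lower().split())
    return len(ta & tb) / len(ta | tb)

def unique_fraction(ideas, threshold=0.3):
    """Greedy count: an idea is 'unique' if it is not too similar to any idea kept so far."""
    kept = []
    for idea in ideas:
        if all(token_jaccard(idea, k) < threshold for k in kept):
            kept.append(idea)
    return len(kept) / len(ideas)

# Figures quoted above for the ChatGPT group in Study 2A
sprinkler_share = 20 / 96
print(f"{sprinkler_share:.1%} of ChatGPT-assisted ideas mention a sprinkler")

# The two example sets from earlier in this section
ai_set = ["Lawn sprinkler", "Water sprinkler", "Garden sprinkler"]
human_set = ["Garden furniture", "Storage rack", "Picture mount"]

print(unique_fraction(ai_set), unique_fraction(human_set))
```

With this toy rule, the three sprinkler ideas collapse into one concept while the three human ideas all stay distinct. Word overlap is a crude proxy, though: it would also treat "Garden sprinkler" and "Garden furniture" as near-duplicates, which is one reason real analyses tend to rely on richer similarity measures.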

The analysis of Study 2B is even more dramatic.

The participants were asked to create a toy using a brick and a fan.

This study showed the most extreme lack of diversity in the entire research project.

Only 6% of those ChatGPT-only ideas were unique; 41.2% were unique when ChatGPT was combined with human input.

Whereas human-only ideas were 100% unique!

From a traditional brainstorming standpoint, that’s not great.

The whole value of brainstorming is to produce a mosaic of ideas, allowing us to have a wide assortment of perspectives to choose from.

If everyone shows up with just variations of the same idea, you’d likely uninvite them from the next recurring brainstorming session. As the authors nicely put it,

As original as transforming a tennis racket and garden hose into a sprinkler may be, successful brainstorming would yield a mosaic of unique perspectives — not just a lineup of sprinklers.

So, we have a paradox.

With ChatGPT, each individual’s idea might be more creative than it would have been otherwise (a win for quality), but the group’s collection of ideas becomes less diverse (a potential loss for exploration).

One caveat… this series of research was done with GPT-3.5, one of the earlier models, which is no longer available.

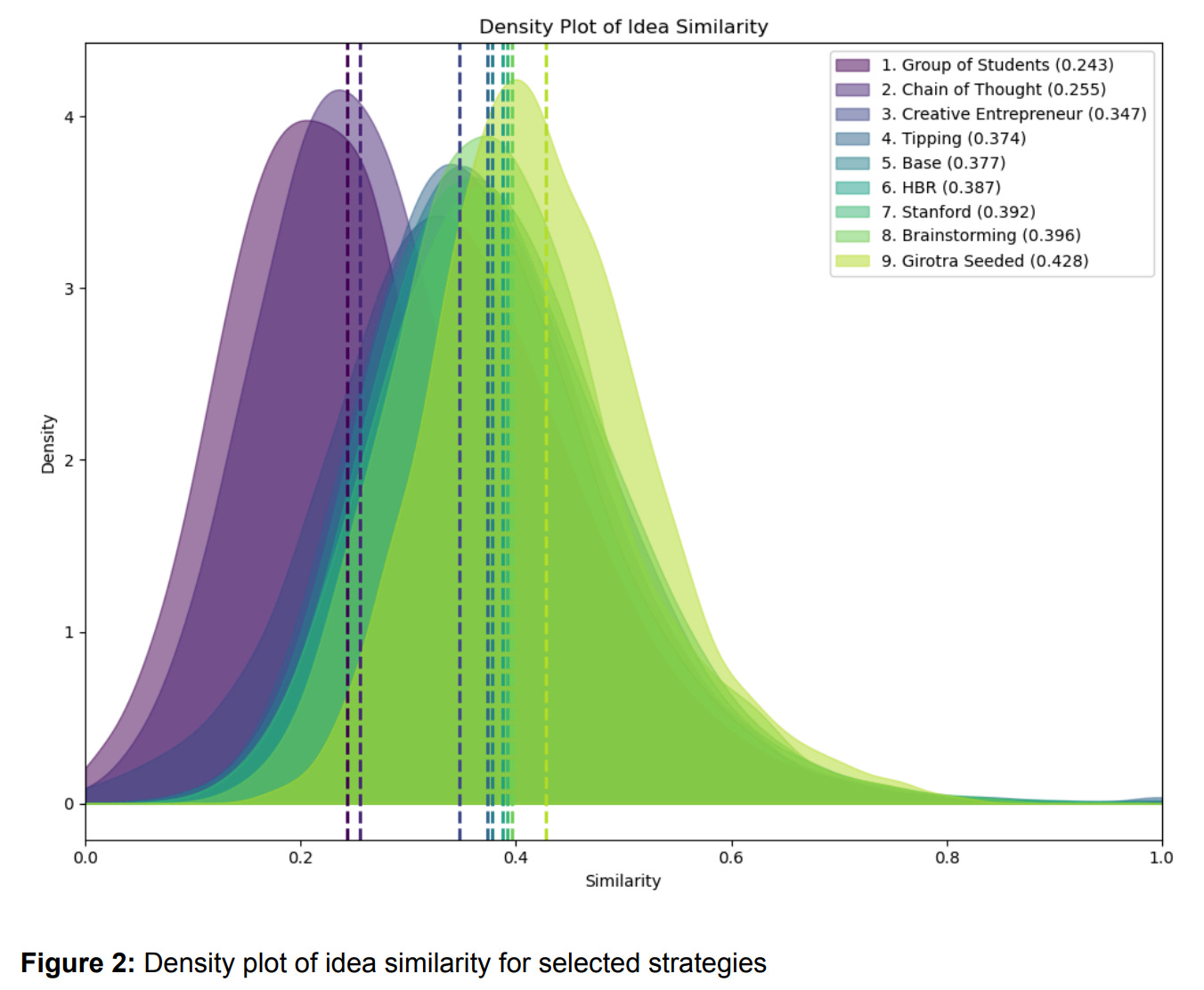

This team quickly published another study, which tests whether chain-of-thought prompting can improve the diversity of AI-generated ideas.

The figure below shows that, with chain-of-thought prompting, the latest LLMs produce increasingly diverse ideas, closer to the diversity of ideas that come from human brains.

Beyond that, they also found that using different LLMs is likely to give you different answers.

I had a call with Lennart Meincke (one of the authors) on Monday, and he gave me a very interesting analogy.

If you keep asking the same person what they enjoy eating, you’ll likely get the same or similar answers. Only by asking someone else (another LLM or using prompt variations) are you likely to get different responses.

More Evidence: Does AI Help or Hinder Creative Diversity?

The findings above didn’t occur in isolation. In fact, they rhyme with other recent research examining how generative AI influences creativity.

AI in Story Writing (Doshi & Hauser, 2024). The researchers had ~300 people write ultra-short stories (just 8 sentences) for a young adult audience.

Participants were split into three groups:

One got no AI help,

One could ask ChatGPT for a single 3-sentence story idea to build on,

And one could have ChatGPT generate up to five ideas and pick their favorite as a starting point.

Below is the screenshot of the experiment setup.

They found that the more GenAI ideas writers had, the higher their stories scored in terms of creativity. A comment from the authors:

our results suggest that generative AI may have the largest impact on individuals who are less creative.

The AI suggestions helped level the playing field by improving their output to be on par with the naturally creative folks.

So far, so good.

However!

You guessed it, there was a downside.

The researchers also analyzed the similarity of the stories using embedding-based metrics. They found that stories from the AI-aided groups ended up more similar to each other than those from the non-AI group.

Allowing writers just one AI-generated idea led to a 10.7% increase in similarity among their stories compared to stories written with no AI at all. As you can see, there is a shift to less diverse ideas in the screenshot below.
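For the curious, this is roughly what an embedding-based similarity comparison looks like. It is only a sketch: the three-dimensional vectors are invented placeholders (real story embeddings come from a language model and have hundreds of dimensions), and the numbers it prints have nothing to do with the study's 10.7% figure.

```python
import math
from itertools import combinations

def cosine(u, v):
    """Cosine similarity between two vectors of equal length."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)

def mean_pairwise_similarity(vectors):
    """Average cosine similarity over every pair of stories in a group."""
    pairs = list(combinations(vectors, 2))
    return sum(cosine(u, v) for u, v in pairs) / len(pairs)

def relative_increase(baseline, treated):
    """How much higher 'treated' is than 'baseline', as a fraction of baseline."""
    return (treated - baseline) / baseline

# Hypothetical "story embeddings" for two groups of three stories each
no_ai_stories = [(1.0, 0.5, 0.0), (0.0, 1.0, 0.5), (0.5, 0.0, 1.0)]
ai_stories = [(1.0, 0.2, 0.0), (1.0, 0.0, 0.2), (0.9, 0.1, 0.1)]

baseline = mean_pairwise_similarity(no_ai_stories)
treated = mean_pairwise_similarity(ai_stories)
print(f"no AI: {baseline:.2f}, with AI: {treated:.2f}")
print(f"relative increase in similarity: {relative_increase(baseline, treated):.0%}")
```

The idea is simply that stories pointing in similar directions in embedding space score closer to 1, so a group that anchors on the same starting idea ends up with a higher average.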

One of the study authors stated:

if the publishing industry embraced generative AI widely, the collective pool of stories would likely become more homogeneous, less unique in aggregate.

So what’s great for an individual author’s creativity could become a problem when everyone is using the same tool, especially when the anchoring effect happens:

anchoring the writer to a specific idea, or starting point for a story, generative AI may restrict the variability of a writer’s own ideas from the start, inhibiting the extent of creative writing.

There’s even a worry of a “downward spiral”.

If AI-assisted stories are rated as more creative and thus become more popular, writers have an incentive to use AI more, which further reduces collective novelty, and so on.

Challenging Your Assumptions

You might be thinking, “Alright, AI can make me more creative individually but make us less creative collectively. Case closed?”

Well… not quite.

Allow me to throw some second-order questions at you.

Are we measuring the right thing?

Human–AI collaboration is more than one-shot prompts.

Creativity over time: are we missing the long view?

Are we measuring the right thing?

Keep in mind, statistical significance ≠ practical significance.

Suppose GenAI causes a 10% drop in the number of unique ideas in a typical session. Is that a game-changing loss, or just a minor dip that a team could easily compensate for?

If you still get, say, 18 distinct ideas instead of 20, would the brainstorming outcomes be meaningfully worse?

Some authors obviously believe diversity is critical (and indeed, it is in many settings). However, they didn’t measure whether the final solutions or decisions in a group would suffer from that reduced diversity.

For example, if AI helps everyone converge on a really effective idea (like the sprinkler solution, which arguably is a pretty clever reuse of a racket and hose), maybe that convergence isn’t so bad after all.

Novelty, for novelty’s sake, isn’t always the goal.

What you should ask is: when does a 20% reduction in unique concepts translate to a worse outcome (failed innovation, missed opportunity)? That remains an open question.

Human–AI Collaboration is More Than One-Shot Prompts.

The experiments we have so far largely treat AI assistance as a single interaction: the person asks for ideas, the AI responds, and that's the end.

In reality, creating with AI is typically an iterative process.

You might get an idea from ChatGPT, then critique it, refine it, or use it as a springboard for something entirely different.

Or you might prompt the AI in specific ways (“Give me something more radical. Now something more practical. Okay, combine those.”). Essentially, playing director to the AI actor. None of the studies captured the rich back-and-forth that most users do in the real world.

It’s possible that if used in an iterative loop, AI tools could actually increase diversity: for example, you can deliberately ask the AI for ideas in different styles or domains each round for a spread of concepts.

In a one-shot scenario, ChatGPT would give you the most straightforward, clever idea (hence, multiple people get sprinklers).

But if you explicitly say, “Give me 5 completely unrelated approaches to this problem,” GPT-4 might surprise you.

All well and good in theory… but many of those “different” ideas can still turn out to be essentially one idea.
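If you want to try the round-by-round approach described above, here is one way to script the prompts. Everything in it is a hypothetical helper: the list of "lenses" and the prompt wording are made-up examples, and the code only builds the prompt text. It does not call any AI service, so you would paste the prompts into whichever tool you use.

```python
from itertools import cycle, islice

# Hypothetical "lenses" that push each round in a different direction
LENSES = ["radical", "practical", "playful", "low-cost", "borrowed from another industry"]

def build_round_prompts(task, rounds, lenses=LENSES):
    """Return one prompt per round, each nudging the AI toward a different lens."""
    prompts = []
    for number, lens in enumerate(islice(cycle(lenses), rounds), start=1):
        prompts.append(
            f"Round {number}: {task} Give me one idea that is {lens}, "
            "and make it unrelated to anything suggested so far."
        )
    return prompts

prompts = build_round_prompts(
    "Repurpose an old tennis racket and a garden hose.", rounds=7
)
for prompt in prompts:
    print(prompt)
```

Whether this actually produces conceptually different ideas is exactly the open question: you still have to read the answers and check that they are not five sprinklers in disguise.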

Creativity Over Time, Missing The Long-Term View.

One aspect hardly touched by these studies is what happens after the initial idea. Creativity is a process.

An idea that seems derivative at first can evolve into something unique with development. Or, an original-sounding idea might fizzle out or converge towards a similar implementation as others when you actually build it.

We’ve seen many human ideas that hit dead ends and never went anywhere.

The point is that initial diversity is just one snapshot in time. What we really care about is the diversity of final outcomes or solutions that reach fruition.

It would be fascinating to see a longitudinal study where teams take initial ideas (AI-assisted or not) and develop them over months to see if the AI-spawned ones exhibit less variety in the end products.

From “Does AI Harm Creativity?” to “How Can AI Enhance Creativity?”

Was our framing of this discussion fair?

Much of the discussion has an implicit adversarial tone, as if AI and human creativity are at odds. That framing makes sense during the investigation, yes, but in reality, the question we need to ask is:

Can we co-create with AI rather than co-copy-pasting?

Perhaps it's about pairing human strengths and AI’s strengths optimally, depending on the creative goal: exploration vs. exploitation, breadth vs. depth, innovation vs. optimization.

For exploration…

Given what we’ve learned, we now know that humans excel at thinking outside the box (truly weird, novel, context-shifting ideas) with nuances like emotional resonance or cultural context.

AI excels at combining vast knowledge and ensuring outputs meet certain criteria (staying on topic, being coherent, etc.). ChatGPT (or any GenAI), after all, is a statistical mirror of human knowledge and language.

So, in an ideal workflow, the AI generates a bunch of ideas, the human picks a left-field one and says “AI, now riff further on this unusual idea,” and the two keep alternating, with the AI used both for going broad and for going deep.

As for exploitation, convergent ideation (focusing on one solution) might actually beat divergent thinking. The millions of human minds behind an AI's training data might have converged on similar ideas for a good reason.

Especially in practical, time-sensitive scenarios, AI’s style of “creative convergence” might sometimes be more valuable than creative divergence.

For example:

Troubleshooting. If a machine breaks down in a factory, quickly converging on the most likely fix (rather than brainstorming for exotic solutions) saves time and money.

Or the execution stage of projects. Once a range of ideas has been explored, teams often need to converge on a single plan for implementation.

Still, when our focus is on creativity rather than execution, when everyone uses the same powerful wingman, the result can be flocks of thinkers circling the same idea, no matter how novel it seemed at first.

Just as those studies pointed out, diversity and originality can suffer if we’re not careful.

This doesn't seem catastrophic, since we’re not all writing identical robot-produced novels yet, but it’s noticeable and measurable.

You have likely had your fair share of “spot the AI-generated text” moments.

So, how do we co-create with AI rather than co-copy-pasting?

Well… everything should be tailored to your own process, so I'll refrain from giving you a simple answer. But here are three principles for you to ponder:

Always be very clear about your goal. Most people fail at this step.

Write it down, frame your idea around it, and delay the use of AI.

Less is more, always. Relentlessly delete ideas and output from AI.

As Lennart Meincke said:

AI is great at generating many ideas quickly…

but it can steer you down a specific path too early in the brainstorming process.

It’s often better to come up with a few initial ideas on your own (choosing the problem first), and then use AI to rapidly refine and explore those ideas further.

Stay balanced and intentional.
