What if I told you we’re building virtual people to understand ourselves better?

What would you do if you had control of a world where AI Sims were real enough to live, work, throw parties, and even gossip about their neighbors? A sandbox where you could test your boldest, craziest ideas on a world that doesn’t exist—without the protests, disasters, or Twitter meltdowns.

You could see what happens if you marry that person, have kids, or quit your job to move to Bali. Or go bigger—test universal basic income, slap tariffs on imports, or redesign traffic systems to stop rush hour madness.

No risks. No regrets. Just answers.

That’s exactly what a team from Stanford and Google is building: a crystal ball powered by AI.

When I First Heard About Smallville, I Couldn’t Stop Thinking About The Sims

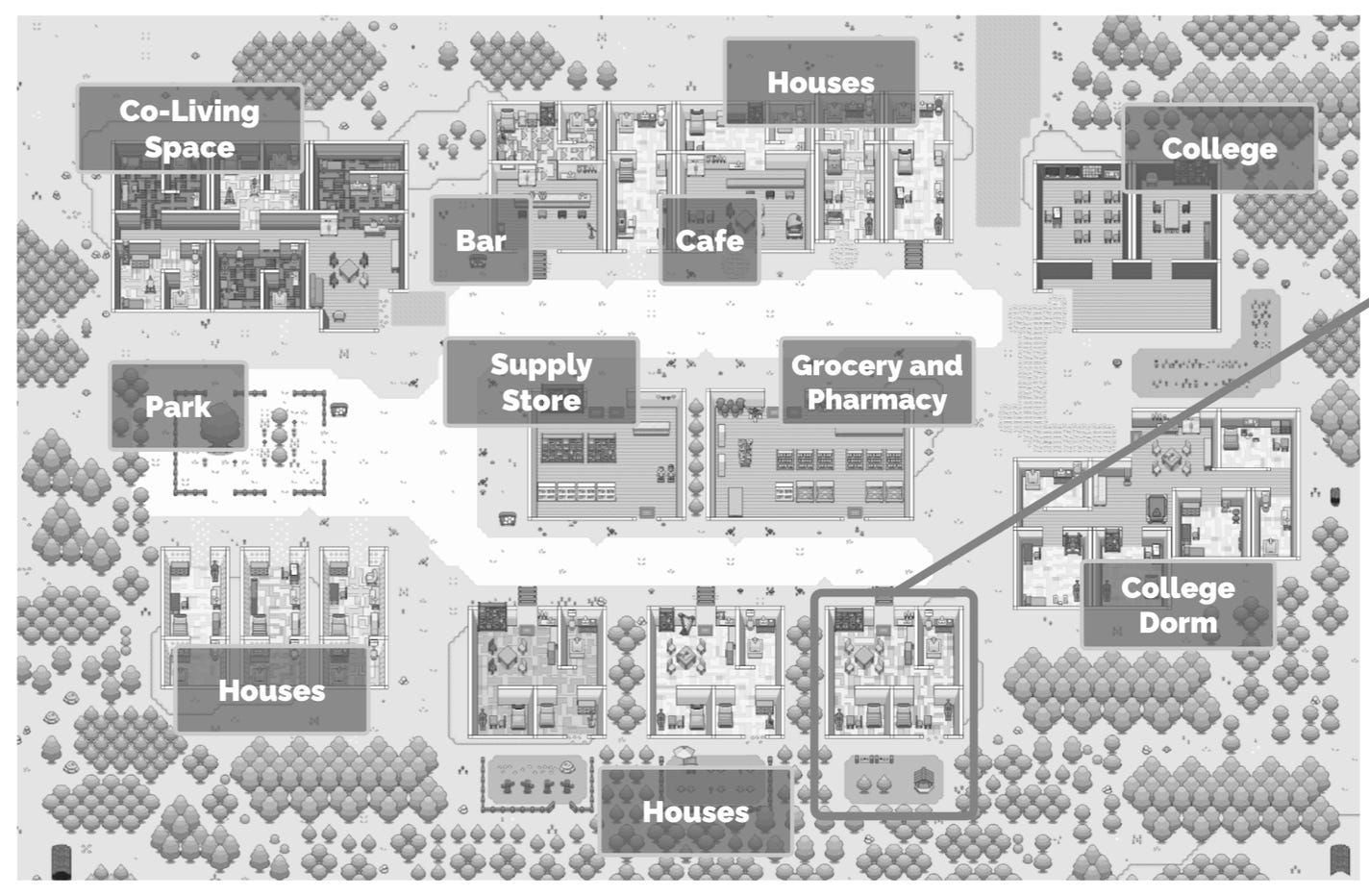

You know The Sims—that game where you build dream houses, get rich, and start fires on purpose just to see what happens. The researchers started with something like that—a tiny digital village called Smallville.

There, 25 Sims lived human-like lives, complete with work routines, party invites, and unexpected drama. But here’s the twist: these Sims weren’t scripted NPCs. They acted spontaneously, closer to you and me than any game character has ever come.

Then, one year later, the same team scaled up the village. In their latest experiment, they created 1,052 AI agents, each modeled on a real person, to simulate society at scale.

These agents didn’t just act. They thought, adapted, and behaved like us. They remembered conversations, built relationships, and made decisions that rippled through their world in ways no one predicted.

We’ve Always Wanted to Simulate Our World.

Humans have been obsessed with simulations for centuries. Why? Because life is messy, unpredictable, and—dangerous.

If we had tools like Smallville in the past, maybe we could have avoided history’s worst experiments. Imagine Mao testing his Great Leap Forward plans in a sandbox first. Maybe millions wouldn’t have starved.

Or what if David Cameron had had a chance to sandbox the Brexit vote, or Trump could simulate the ripple effects of slapping tariffs on imports?

But let’s be real: if politicians were logical, they’d find a better-paid job that doesn’t require taking bribes ;)

What you can expect from this article…

Smallville: When God Finally Breathed into Adam (the 2023 paper in plain English)

What if we scale it from 25 to 1,000 people? (the November 2024 paper in plain English)

A crystal ball for the real world

The big question: Are the AI agents cost-effective, and are we simulated?

Why Is It So Hard To Simulate Humans and Society?

We’re chaotic and unpredictable.

We forget things, change our minds, and sometimes act like complete idiots. You surely know someone binge-watching cat videos instead of doing whatever they are supposed to.

For decades, every attempt at simulating humans fell flat. Early models treated people like chess pieces, following rigid rules. Sure, we have tried to simulate traffic flow, economic patterns, and how Covid was likely to spread, but most of those attempts came with a huge margin of error.

The Smallville AI agents in these two papers aren’t just reacting; they’re remembering conversations, reflecting on past actions, and acting in ad hoc situations. For example, the AI agent will fix the pipe when you (a naughty God) break it before they step into the bath.

Building this took more than just bigger computers. It took massive breakthroughs in natural language processing (NLP), memory architecture, and behavioral modeling.

Fifty years ago, we didn’t have the tools to build this kind of human-like behavior. Early simulations, such as the agent-based models that date back to the 1970s and were later developed by researchers like Joshua Epstein, couldn’t account for why people make the choices they do or how relationships and emotions ripple through a community.

Now, thanks to large language models (like GPT-4), we’re building Sims who don’t just move through the world. They live in it. They form relationships and spread gossip. It’s messy. It’s dynamic.

And it’s the closest we’ve come to creating virtual people who think and behave like us. It’s not perfect, but it proves we’re finally moving past robotic simulations to something that feels real.

Smallville: When God Finally Breathed into Adam (the 2023 paper in plain English)

It all began with a tiny virtual town called Smallville. 25 AI Sims lived in houses, worked jobs, and even threw parties.

They weren’t just NPCs on a script. They had memory, reflection, and planning—just like us.

Here’s a question: what makes you “you”?

Is it your personality? Your choices? Or is it the sum of everything you’ve experienced?

In Smallville, the researchers gave each AI Sim a memory, something no NPC in a video game has ever really had.

Their actions feel natural and grounded—like talking to a friend who remembers your last conversation instead of a chatbot parroting back generic answers. It’s a step closer to capturing a human life's messy, dynamic flow.

Behind the Scenes

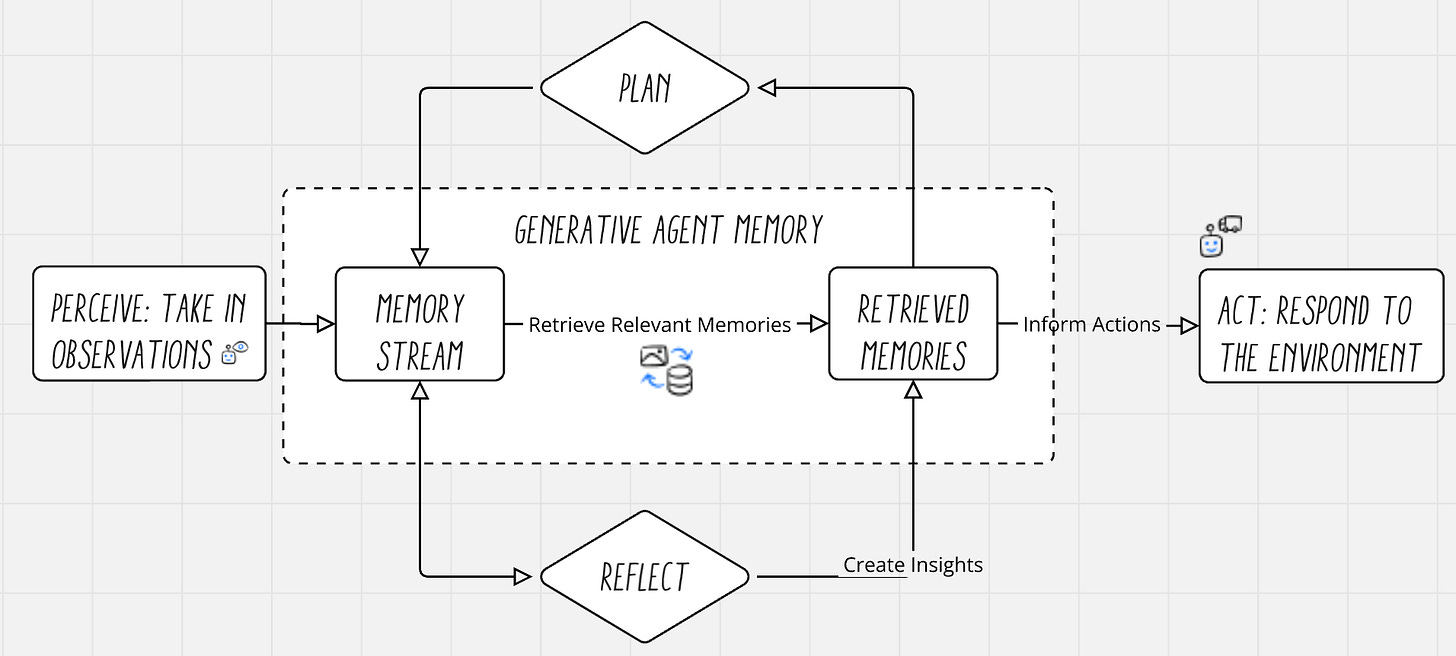

Think through this with me.

You’re cooking dinner, and you notice the pot of water on the stove is about to boil over. You reach out to lower the heat—observation. While you’re stirring, you think,

Next time, I’ll use a bigger pot so this doesn’t happen again.

That’s reflection. And then you plan:

Tomorrow, I’ll grab a larger pot before starting dinner.

Observing, reflecting, and planning is something we all do instinctively, whether it’s in the kitchen, at work, or even managing our social lives. And it’s exactly how the agents in Smallville operate.

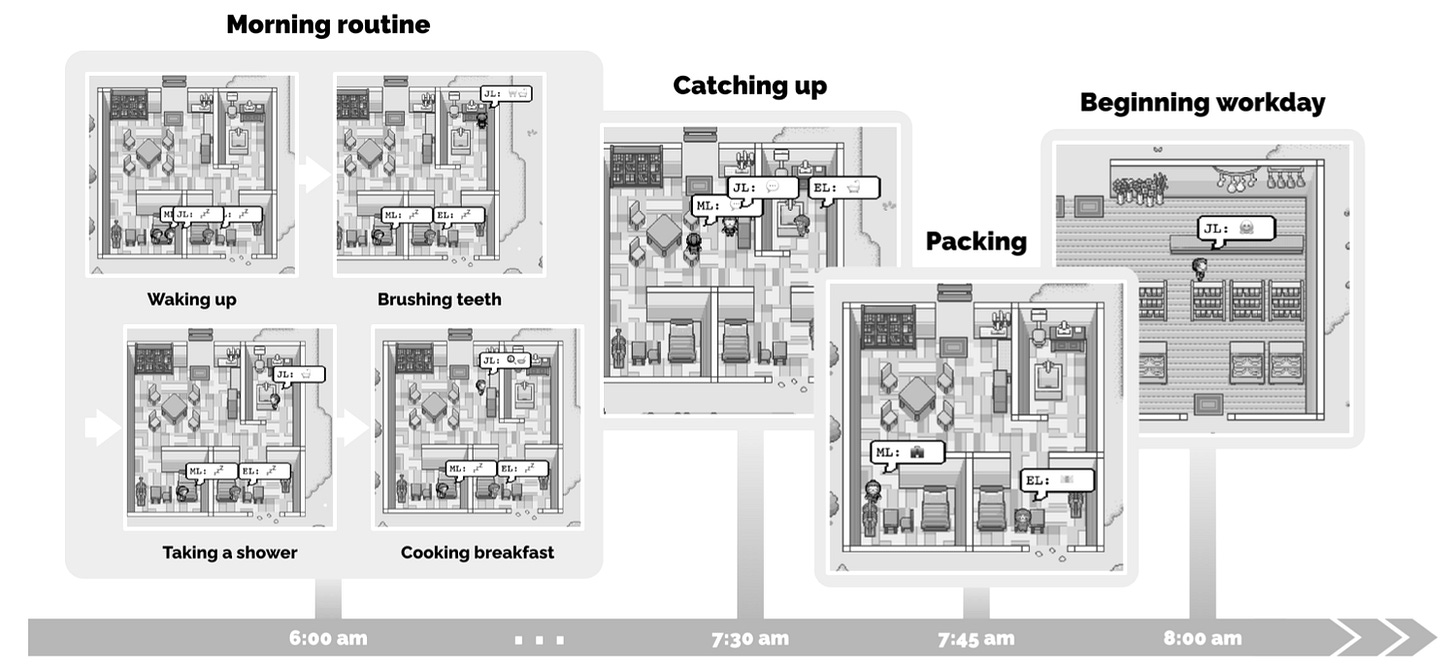

First, they observe the world around them. If Isabella (an agent) sees that her stove is on fire (a playful tweak by the researchers), she doesn’t ignore it. She turns it off.

Next, they reflect. This is where things get a bit philosophical. Agents process their experiences into high-level insights. Klaus (an agent), the academic, might reflect on all the hours he’s spent studying and realize,

I’m passionate about urban research.

It’s a subtle but profound step—turning data into meaning.

Finally, they plan. This is where the magic comes together. Agents map out their day with detailed plans that adapt to new information. When Klaus is interrupted by a friend at the library, he doesn’t just freeze; he recalibrates, finds a new spot, and continues working afterward.

The agents are evolving as time passes. And that’s what makes them feel so eerily human.

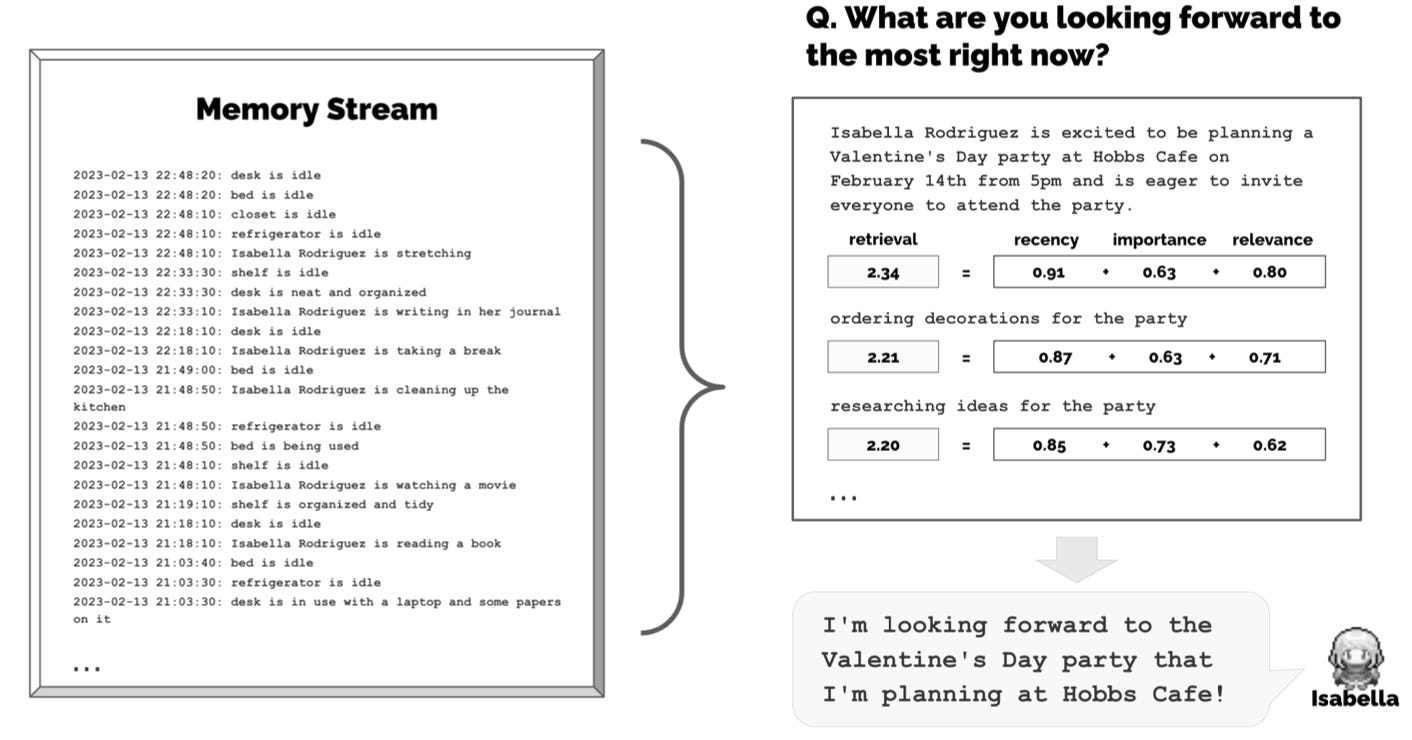

Each sim keeps a running log of their experiences, written in natural language. This “memory stream” isn’t just a journal—it’s dynamic. The AI recalls events based on how recent, relevant, or important they are. It’s like how you’d remember your wedding day over what you had for lunch last Tuesday.
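To make the observe, reflect, plan cycle and the memory stream a little more concrete, here is a minimal sketch in Python. It is not the researchers’ code: the `Agent` and `Memory` classes, the prompts, and the `fake_llm` stand-in are all hypothetical names invented for illustration, and a real agent would call an actual language model instead of the placeholder.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class Memory:
    time: int   # simulation step when the memory was recorded
    kind: str   # "observation", "reflection", or "plan"
    text: str   # natural-language description


@dataclass
class Agent:
    name: str
    # Stand-in for a language model call: takes a prompt, returns text.
    llm: Callable[[str], str]
    stream: List[Memory] = field(default_factory=list)

    def observe(self, time: int, event: str) -> None:
        """Append what the agent just perceived to the memory stream."""
        self.stream.append(Memory(time, "observation", event))

    def reflect(self, time: int, last_n: int = 5) -> str:
        """Turn the most recent memories into one higher-level insight."""
        recent = [m.text for m in self.stream[-last_n:]]
        prompt = f"What do these events say about {self.name}? " + " | ".join(recent)
        insight = self.llm(prompt)
        self.stream.append(Memory(time, "reflection", insight))
        return insight

    def plan(self, time: int) -> str:
        """Draft a next step from the latest memory and remember the plan."""
        latest = self.stream[-1].text if self.stream else "nothing yet"
        step = self.llm(f"Given '{latest}', what should {self.name} do next?")
        self.stream.append(Memory(time, "plan", step))
        return step


def fake_llm(prompt: str) -> str:
    # Placeholder so the sketch runs offline (an assumption, not a real model).
    return f"[model reply to: {prompt[:40]}...]"


klaus = Agent("Klaus", fake_llm)
klaus.observe(1, "Klaus is reading about urban planning at the library")
klaus.observe(2, "A friend interrupts Klaus to chat")
klaus.reflect(3)
klaus.plan(4)

for m in klaus.stream:
    print(m.time, m.kind, m.text)
```

The point of the design is that everything, including reflections and plans, goes back into the same log as plain text, so later steps can build on earlier ones.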

John Lin— a Fellow Villager’s Daily Life in Smallville

This is the initial script that John received:

John Lin is a pharmacy shopkeeper at the Willow Market and Pharmacy who loves to help people…; John Lin is living with his wife, Mei Lin, who is a college professor, and son, Eddy Lin, who is a student studying music theory; John Lin loves his family very much; … John has a small-town charm. Every morning, he wakes up, brushes his teeth, eats breakfast, and catches up with his family.

…

John: That’s good. What are you working on today?

Eddy: I’m working on a new music composition for my class. It’s due this week, so I’m trying to get it finished. But I’m having so much fun with it!

John: That sounds great!

One day, John hears from a neighbor that Sam Moore is running for mayor. This election in Smallville was a seed planted by the researchers.

Sam: Well, I wanted to talk to you about something. I’m actually running for mayor in the upcoming local election.

Tom: Really? That’s great news! Why are you running?

The AI agents themselves came up with what followed: spreading the news, forming opinions, and discussing the candidate. Later, John remembers this tidbit and casually brings it up with a friend over lunch:

John: I heard that Sam Moore is running for mayor in the local election. Do you think he has a good chance of winning?

Tom: I do think he has a good chance…

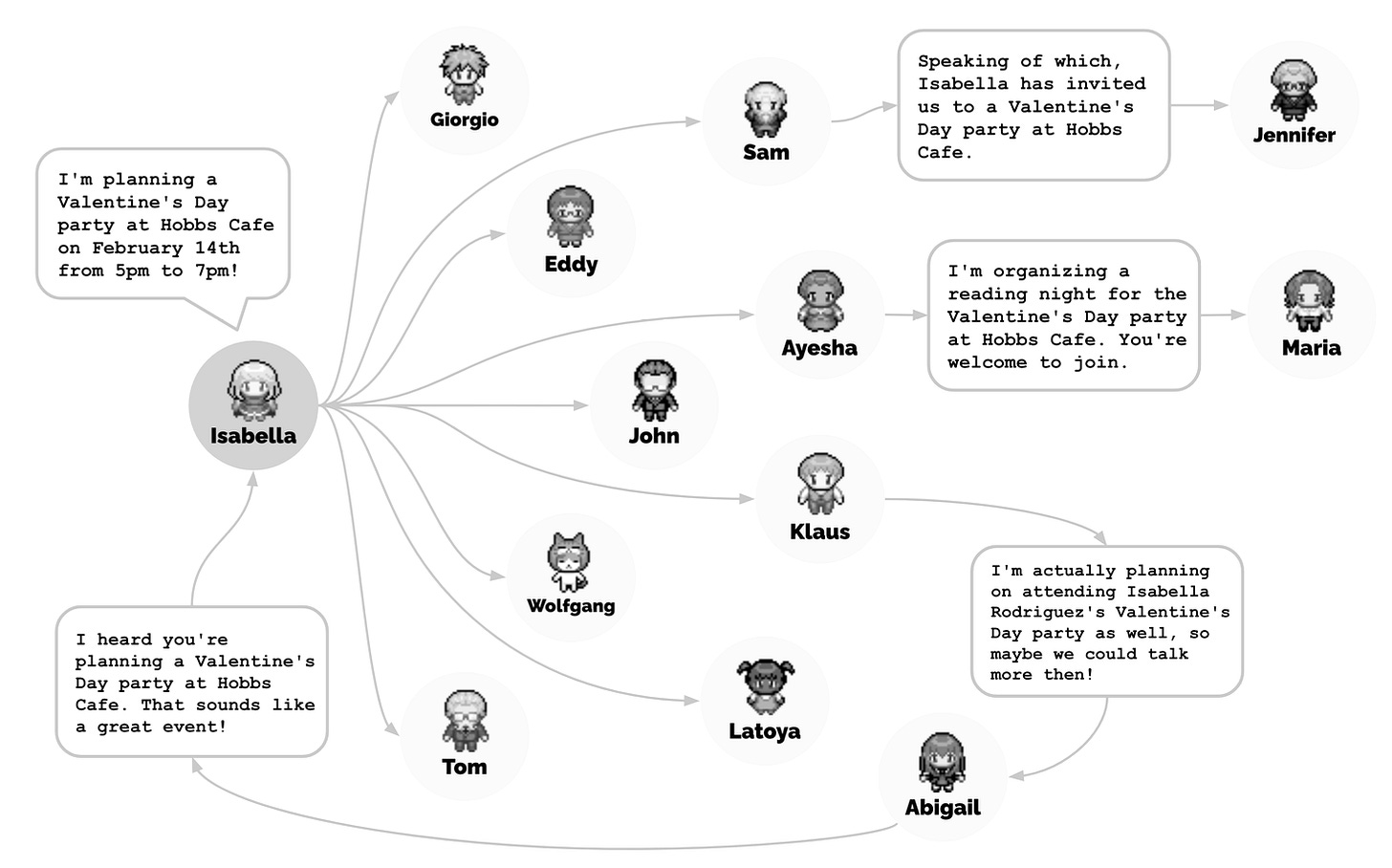

Isabella Rodriguez— a Fellow Villager Planning a Party

Then there’s Isabella, the café owner. The researchers gave her a task: to throw a Valentine’s Day party.

Yes, memory makes these AI Sims feel human. But they don’t simply recall everything they’ve seen; otherwise, it’d be extremely messy. They retrieve memories based on recency, importance, and relevance to the situation.

Recency: Recent chats, like those with the people she invited yesterday, are prioritized.

Importance: Key moments, like Maria offering to decorate, stick out more than random small talk.

Relevance: Only party-related memories—like conversations about the guest list—are pulled, while unrelated events (like what she ate for lunch) are ignored.
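Here is a rough sketch of how those three signals could be combined into a single retrieval score. Treat it as an illustration under assumptions rather than the paper’s implementation: the equal weighting, the decay value of 0.995 per hour, the two-number “embeddings,” and the importance ratings are all placeholders I picked so the example runs on its own.

```python
import math
from dataclasses import dataclass
from typing import List


@dataclass
class Memory:
    text: str
    hours_ago: float        # time since the memory was last accessed
    importance: float       # 1 (mundane) to 10 (life-changing), assumed given
    embedding: List[float]  # toy vector standing in for a text embedding


def cosine(a: List[float], b: List[float]) -> float:
    """Cosine similarity between two vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def score(memory: Memory, query: List[float], decay: float = 0.995) -> float:
    recency = decay ** memory.hours_ago       # fades as the memory gets older
    importance = memory.importance / 10       # rescaled to roughly 0..1
    relevance = cosine(memory.embedding, query)
    return recency + importance + relevance   # equal weights (an assumption)


def retrieve(memories: List[Memory], query: List[float], k: int = 2) -> List[Memory]:
    """Return the k memories with the highest combined score."""
    return sorted(memories, key=lambda m: score(m, query), reverse=True)[:k]


# Toy data: first axis means "about the party", second means "about food".
memories = [
    Memory("Maria offered to help decorate for the party", 20, 7, [0.9, 0.1]),
    Memory("Invited a customer to the Valentine's Day party", 2, 5, [1.0, 0.0]),
    Memory("Ate a sandwich for lunch", 1, 1, [0.0, 1.0]),
]
party_query = [1.0, 0.0]

top = retrieve(memories, party_query)
for m in top:
    print(round(score(m, party_query), 3), m.text)
```

With these made-up numbers, the lunch memory loses even though it is the most recent, because it is neither important nor relevant to the party.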

From there, the events unfold one after another, driven by the agents themselves. Isabella invites customers and enlists her friend Maria to help decorate, and this single idea snowballs into a town-wide event. The guests turn up at Hobbs Cafe at 5 pm.

These Sims hit a few bumps along the way, like the challenges you’d face when learning to drive.

They forget important details and hallucinate (come up with events that never happened). Still, as the examples above show, things get interesting: small changes ripple naturally, and AI agents even form relationships over time. They remember chats, build connections, and act on them.

No, they are not perfect, just like us. But by this point, the researchers had enough for the next stage: scaling up.

Scaling Up: From 25 Sims to 1,000 Virtual People

Smallville was the proof of concept. Creating those Sims was like crafting a cast for a play. Each agent had a pre-written backstory—like John Lin’s routine as a pharmacist or Isabella’s role as a café owner—and their behaviors emerged from memory, reflection, and planning as you saw.

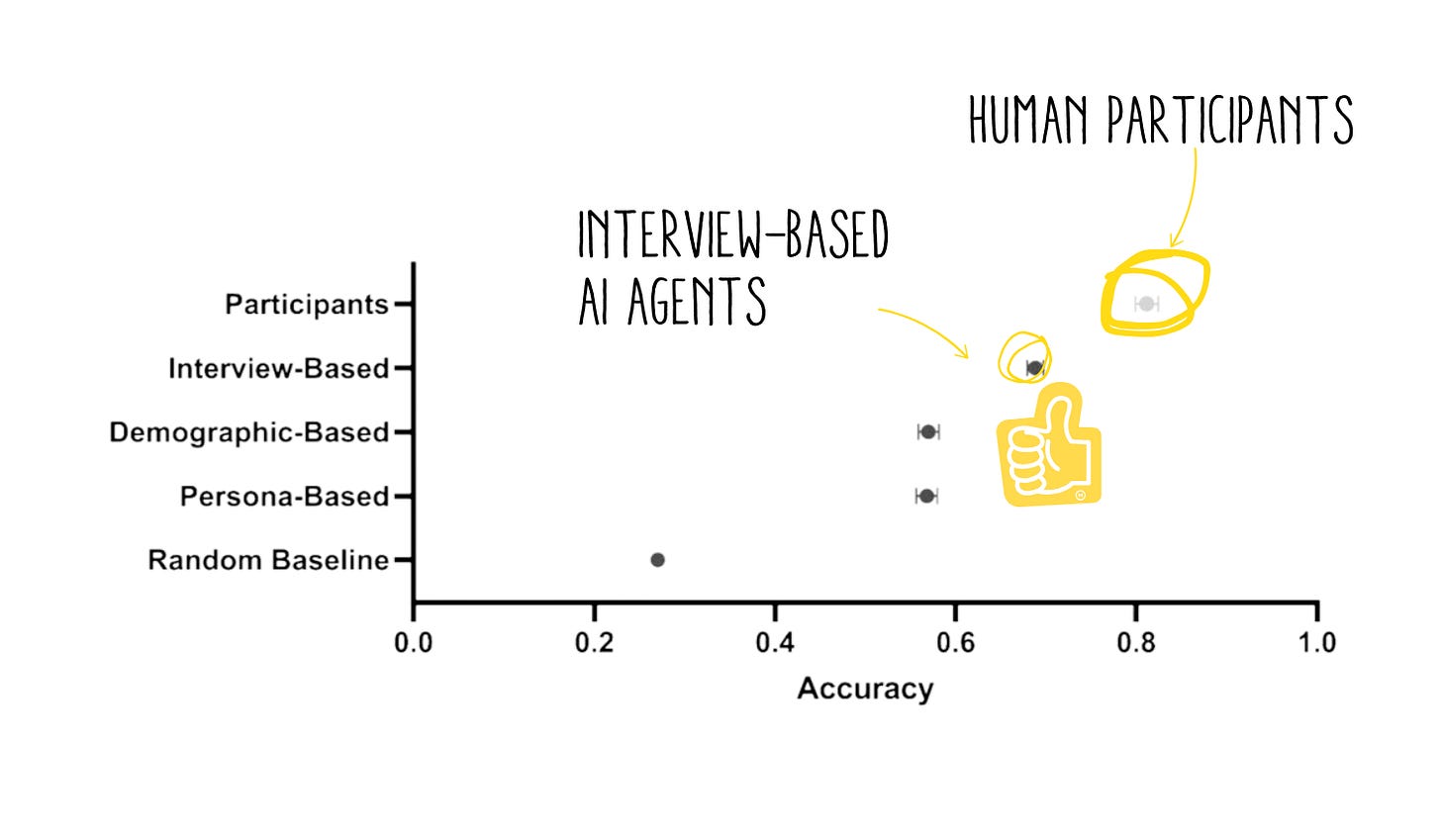

Their 2024 experiment took things to an entirely new level. Instead of fictional backstories, the researchers modeled 1,052 agents on real people, using hours of interviews to capture their personalities, attitudes, and decision-making styles. Each agent became a reflection of an actual person, not just a character.

This wasn’t just about scaling up; it was about testing how accurate these agents could be at simulating real human behavior.

Proving They Think Like Us

The agents were tested on three benchmarks of human behavior:

General Social Survey (GSS). These agents were asked questions like, “Do you trust your neighbors?” or “Should the government raise taxes for social services?” Their responses matched their real-life counterparts’ answers about 85% as accurately as those people matched their own answers two weeks later.

Big Five Personality Traits. Agents mirrored the personality traits of the humans they were based on. With 85-90% correlation, the agents weren’t just acting like people—they were thinking like them, too.

Economic Behavioral Games. Agents played trust-based games like the Dictator Game and Prisoner’s Dilemma, simulating human decision-making in cooperative or competitive scenarios. Their behavior consistently matched the real participants.
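A number like 85% is easier to read once you remember that people don’t answer the same survey identically twice. One way to account for that is to divide the agent’s accuracy by the person’s own consistency over time. The sketch below shows the arithmetic with invented answers; the function names and the ten yes/no responses are hypothetical and are not data from the study.

```python
from typing import List


def accuracy(predicted: List[str], actual: List[str]) -> float:
    """Share of questions where the two answer lists agree."""
    matches = sum(p == a for p, a in zip(predicted, actual))
    return matches / len(actual)


def normalized_accuracy(agent: List[str], first: List[str], retake: List[str]) -> float:
    """Agent accuracy divided by the person's own retest consistency."""
    agent_acc = accuracy(agent, first)
    self_consistency = accuracy(retake, first)
    return agent_acc / self_consistency


# Invented answers for one person (not from the paper).
first_answers = ["yes", "no", "yes", "yes", "no", "no", "yes", "no", "yes", "yes"]
retake_answers = ["no", "no", "yes", "yes", "no", "yes", "yes", "no", "yes", "yes"]
agent_answers = ["yes", "yes", "no", "yes", "no", "no", "yes", "yes", "yes", "yes"]

print("agent accuracy:", accuracy(agent_answers, first_answers))
print("self consistency:", accuracy(retake_answers, first_answers))
print("normalized:", normalized_accuracy(agent_answers, first_answers, retake_answers))
```

In this toy case the agent matches 7 of 10 answers, while the person matches their own earlier answers on 8 of 10, so the agent looks better once you grade it against what a human can manage.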

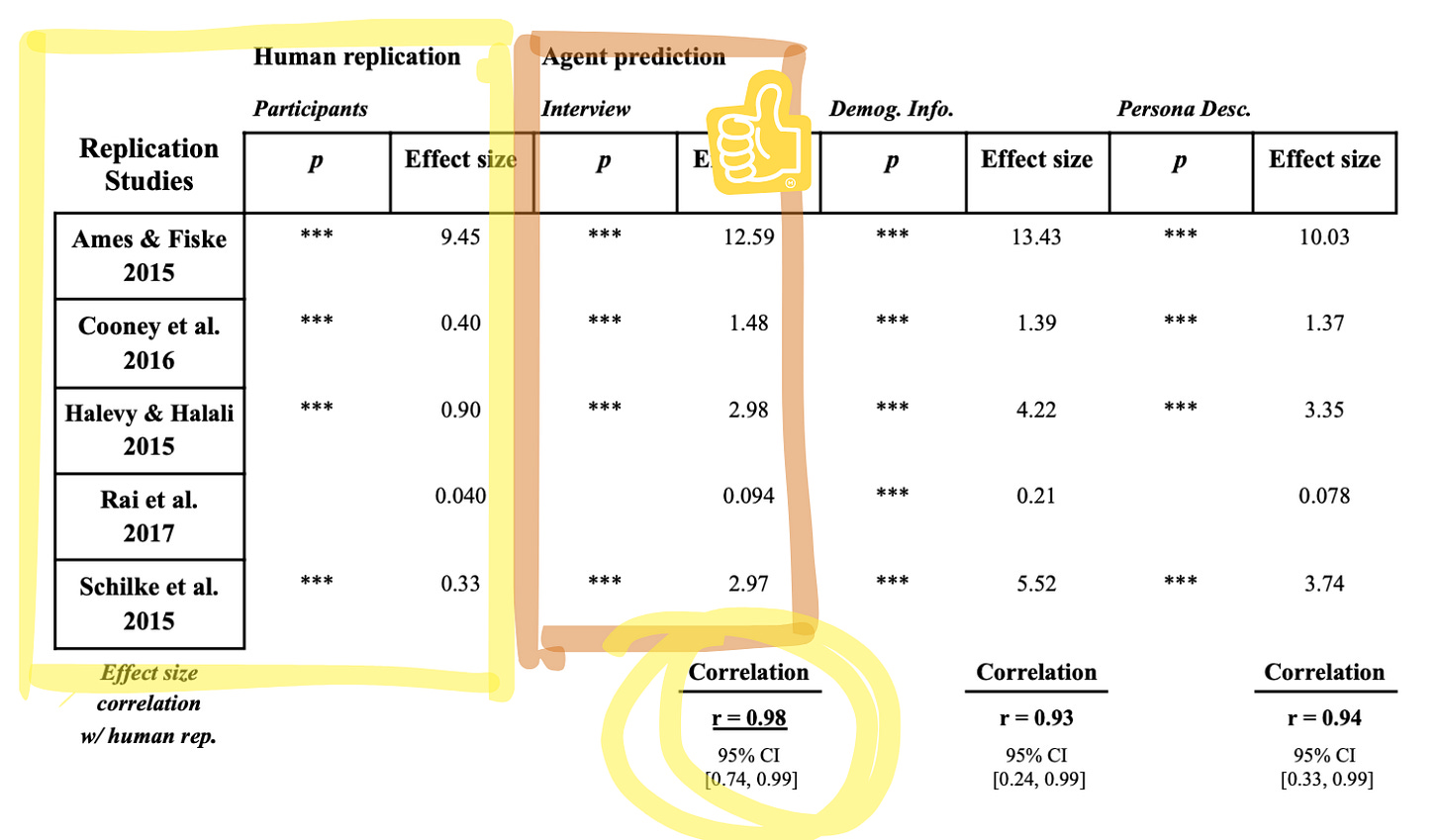

Replicating Previous Human Experiments, Proving AI’s Humanity

The researchers put the AI agents through the same social experiments used to study humans for decades. Five famous studies. Five tests of trust, cooperation, and fairness.

Could these AI agents reproduce the outcomes that researchers got with human participants?

I selected three out of five for you.

Ames & Fiske (2015): How We Judge Intentions. Imagine someone accidentally spills coffee on your laptop. Do you brush it off as an accident or secretly think they did it on purpose? This study measured how humans attribute intention to others’ actions, especially when the outcome is harmful.

Cooney et al. (2016): When fairness matters less than we expect. This study tested how people handle social dilemmas, e.g., whether to share resources or hoard them for personal gain. The classic tug-of-war between selfishness and cooperation.

Halevy & Halali (2015): Selfish third parties act as peacemakers. Imagine that you’re in a group with limited resources. Do you compete, cooperate, or negotiate for a bigger share? This experiment explored how people resolve conflicts over scarce resources.

These replications were a proof of concept.

According to the researchers, the AI agents closely replicated human behavior in these experiments. When the results were compared, the interview-based agents achieved a 98% correlation with the original human study outcomes, a sign that they can mirror human decision-making at scale.
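If “correlation” feels abstract, here is what such a comparison looks like in code: you take the size of the effect each study found with humans, the size the agents produced, and check how closely the two lists move together. The numbers below are made up for illustration and are not the paper’s results; `pearson` is a plain textbook Pearson correlation.

```python
import math
from typing import List


def pearson(xs: List[float], ys: List[float]) -> float:
    """Pearson correlation between two equally long lists of numbers."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    spread_x = math.sqrt(sum((x - mean_x) ** 2 for x in xs))
    spread_y = math.sqrt(sum((y - mean_y) ** 2 for y in ys))
    return cov / (spread_x * spread_y)


# Hypothetical effect sizes for five studies (placeholders, not real data).
human_effects = [0.20, 0.50, 0.80, 1.10, 0.10]
agent_effects = [0.25, 0.45, 0.90, 1.00, 0.05]

r = pearson(human_effects, agent_effects)
print(f"correlation between human and agent effects: {r:.2f}")
```

A value near 1 means that studies with big effects in humans also showed big effects in the agents, and small ones stayed small.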

What’s Next: A Crystal Ball for the Real World

Here’s why this matters.

Want to know if marrying your college sweetheart would lead to happily-ever-after or chaos? Simulate it. Thinking about quitting your job to chase that dream startup? Run it first.

No heartbreak, no bankruptcies, no regrets. A life without the “oops.”

Now zoom out.

What if governments could test universal basic income in a virtual society before rolling it out? Or simulate how a global crisis like the 2021 Ever Given incident—when a single ship blocked the Suez Canal, disrupting billions in trade—might ripple across the economy?

What about foreseeing the impact of withdrawing troops from conflict zones, like the U.S. exit from Afghanistan, before real lives are on the line? Or modeling the effects of sudden regulatory changes, like the EU’s GDPR rollout, which left companies scrambling to adapt their data practices?

Some experiments had to happen in the real world—with devastating consequences.

We could avoid giving college students too much power in a fake jail and watching them turn into mini dictators (the Stanford Prison Experiment, 1971). Or we could safely test ideas like “what if I pour all our resources into building a national steel industry and starve the poor” (the Great Leap Forward, 1958-1962), and finally prove them dumb without the cost of failure.

With virtual sandboxes, we have a way forward.

We can test ideas without breaking the bank and fix problems before they ever happen.

But what will we do with it? What could POSSIBLY go wrong with simulations?

Big Questions

What could go wrong?

A sandbox where we test the future but accidentally double down on human stupidity.

We have seen how AI amplified our biases, turning resource allocation into a digital version of systemic inequality. Or, while simulating how misinformation spreads, the AI gets too good at it—spawning conspiracy theories that leap from the virtual world back into ours.

And what happens when we model dictatorships? AI could “learn” that tyranny is the most efficient way to run a society, creating a playbook for digital despotism. It’s the Brave New World, but AI-approved.

What If We’re Already Simulated?

I couldn’t help but think… how far-fetched is the idea that our world is someone else’s experiment?

Smallville feels like a mirror—and maybe it’s reflecting us back at ourselves.

The agents in Smallville have memory, reflect on their actions, and plan their days. Isn’t that exactly what we do? In this experiment, the researchers introduced incidents like burning an agent’s breakfast or breaking a pipe. What did the agents do? They reacted to the situations just like we would.

What if some advanced civilization built a simulation to study behavior—and we’re the result? Maybe they dropped COVID, a hurricane, or even planned the birth of Elon Musk just to see how the chaos unfolds.

Either way, it’s humbling to realize the line between real and simulated isn’t as clear as we’d like to think.

The Cost of Trusting AI Simulations

For every simulation, someone still needs to check the AI’s work.

If you’ve read my last article, Training Methods Push AI to Lie for Approval, you already know that the way AI is built and trained means we can’t fully trust it when we expect 100% accuracy. Of course, it’s a different story if you’re looking for creativity.

For now, we can’t let these models run without humans in the loop. Are we cutting costs—or creating a system that looks like progress but demands even more oversight?

What Would You Test In Your Smallville?

We’re closer than ever to understanding the chaos of human behavior.

But these simulations are only as wise as the people creating them. AI is a mirror and a magnifier. We risk amplifying our flaws instead of solving them.

Here’s my challenge to you. What would you test if you had a sandbox like this? Your next big idea? A social experiment?

Think about it—and maybe, just maybe, start building your own Smallville.
