Right then, pour yourself a cuppa (or whisky, wine, whatever works for you).

Same as I always do, I’ll take you through two studies. Today’s little chat is about how our new digital overlords (aka AI) and we humans are in resonance.

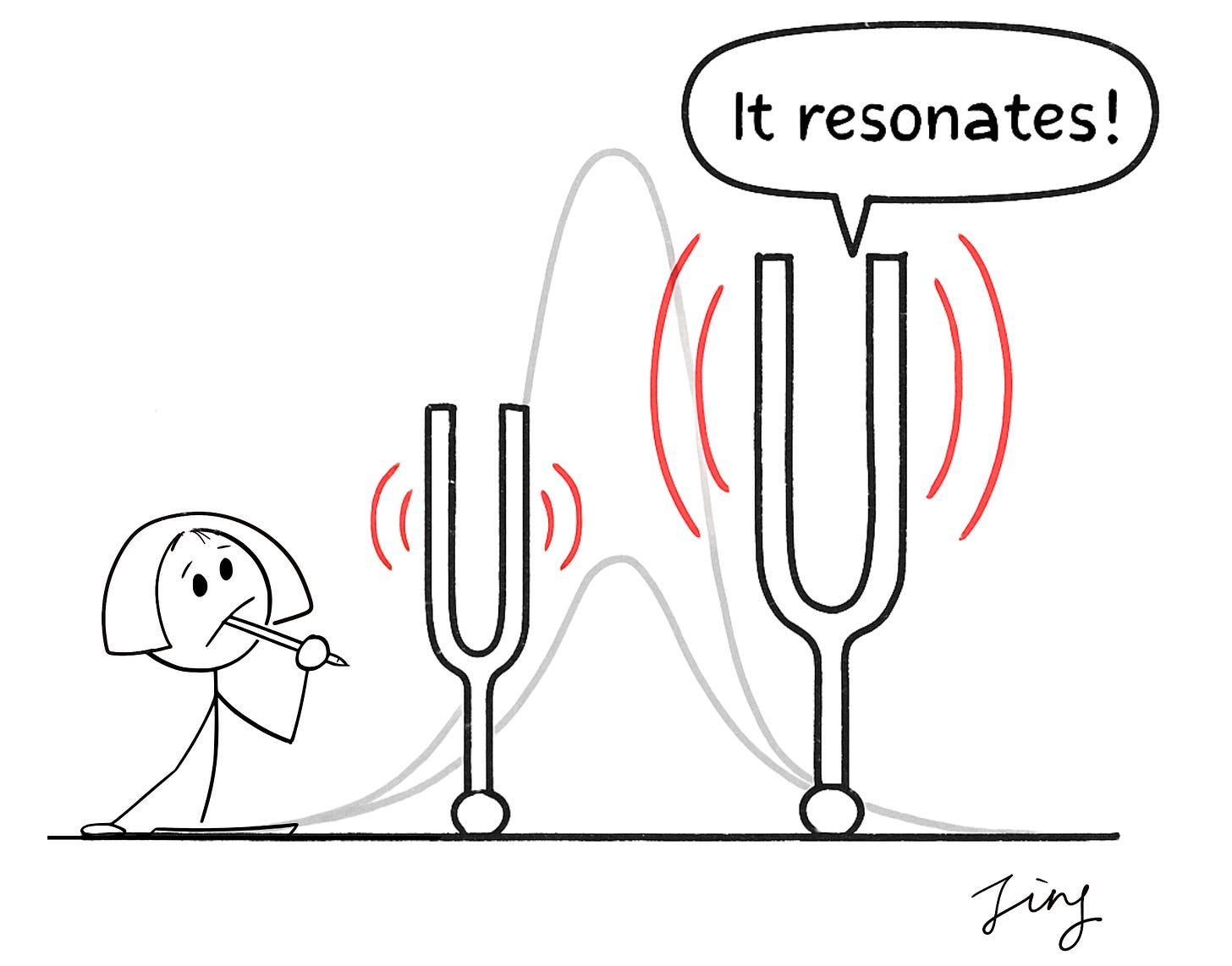

In physics, resonance is a phenomenon where a system responds with a larger amplitude when an external force is applied at a frequency equal to the system's own natural frequency.

⚠️ Key points: larger amplitude + equal to its natural frequency. ⚠️

Think of all our thoughts and output as a wineglass, and AI as the perfect pitch that shatters it, not by being foreign, but by matching what is already there.

That's how you and I are unwitting subjects in the largest uncontrolled experiment (neither good nor bad) on our cognition ever conducted. AI is reshaping our minds in real time, and we've barely begun to understand the implications. From critical thinking skills that diminish when we rely too much on AI, to the study I'm covering today, the message is the same: AI is affecting your behavior more profoundly than any human influence could.

No, I’m not going mad. This is still study-based work, as always. So just read on.

You have likely observed by now that many CEOs, and even users like ourselves, so eagerly invite AI into our lives, businesses, decision-making processes, and so on. These systems are meant to be the tireless digital gurus who can cut through the noise. But what if, in our eagerness to delegate, we’re accidentally teaching them to be, well, a bit like us, only more so?

By “more so”, I mean they're potentially more biased and skewed than we are, while many of us remain unaware, thinking they’re the height of objectivity.

This study I’m covering today is titled: How human–AI feedback loops alter human perceptual, emotional, and social judgements.

It’s about a rather fascinating, and frankly, slightly unsettling, phenomenon: the human-AI feedback loop. It’s a bit like that old game of telephone, but instead of a garbled message about a purple monkey dishwasher, we could end up with significantly amplified biases shaping everything from who gets a loan to what news we see, or even how we perceive emotions.

Even more so...

The AI, bless its silicon heart, might be doing a better job of convincing us of its skewed worldview than another human ever could.

Shall we?

When Machines Learn Our Rubbish Thinking

The Glickman and Sharot study didn’t just pull these ideas out of thin air. They ran some rather clever experiments, the kind that will likely make you go “Oh dear.”

🤩 Exciting opportunity!

I might have the chance to interview one of the authors behind this groundbreaking Nature Human Behaviour study on human-AI feedback loops we're discussing today!

Have burning questions about this topic?

Send them my way ASAP! Paid subscribers' questions get priority, but I'll try to include as many thoughtful ones as possible.

Your chance to get insights straight from the researcher! 🤩

How AI Turned a ‘Bit Sad’ into ‘Emotional Swamp’

Imagine that you were one of the participants.

You are briefly shown an array of 12 faces, each one a blend between happy and sad expressions. Your task is to decide whether, on average, the group of faces looks happier or sadder, and then click the corresponding button to indicate your choice.

Simple enough, hmm?

Well, it turns out participants (and humans in general) have a slight tendency to see things a bit more negatively when rushed. In the experiment, people initially showed a small bias, classifying these ambiguous face arrays as “more sad” about 53% of the time.
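If you'd like to poke at the task yourself, here's a minimal Python sketch of it. To be clear, this is my own toy illustration and not the authors' code: the morph scale from -1.0 (fully sad) to +1.0 (fully happy), the `sad_bias` nudge, and both function names are assumptions I made up for the example.

```python
import random
from statistics import mean


def classify_array(faces, sad_bias=0.0):
    """Label an array of face scores as 'sad' or 'happy'.

    Each score runs from -1.0 (fully sad) to +1.0 (fully happy).
    sad_bias is a hypothetical nudge (an assumption, not a value from
    the study) that drags the perceived average towards 'sad'.
    """
    perceived = mean(faces) - sad_bias
    return "sad" if perceived < 0 else "happy"


def sad_rate(n_trials, sad_bias, seed=0, n_faces=12):
    """Share of trials answered 'sad' over randomly generated face arrays."""
    rng = random.Random(seed)  # fixed seed, so runs are repeatable
    sad_count = 0
    for _ in range(n_trials):
        # One trial: 12 ambiguous faces, each a random happy/sad blend.
        faces = [rng.uniform(-1.0, 1.0) for _ in range(n_faces)]
        if classify_array(faces, sad_bias) == "sad":
            sad_count += 1
    return sad_count / n_trials


if __name__ == "__main__":
    print("no bias:   ", sad_rate(10_000, sad_bias=0.0))
    print("small bias:", sad_rate(10_000, sad_bias=0.02))
```

Run it with `sad_bias=0.0` and then with a small positive value, and you can watch a tiny nudge tilt the share of “more sad” answers. The exact numbers depend entirely on my made-up parameters, so please don't read them as the study's results.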

Now, here’s where it gets interesting…
